By A Mystery Man Writer

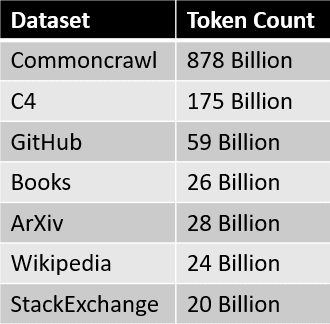

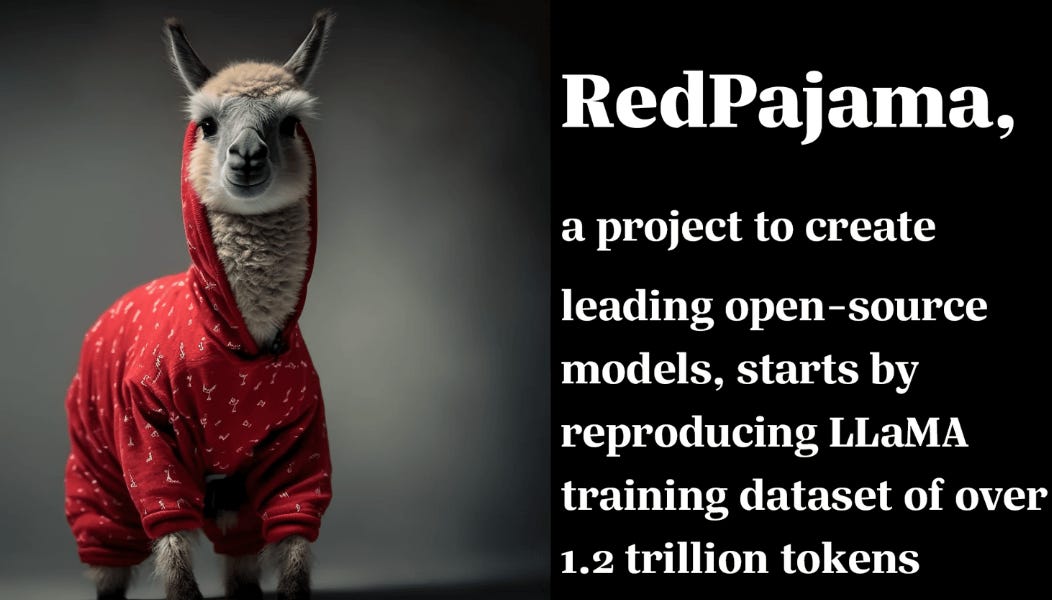

RedPajama is “a project to create leading open-source models, starts by reproducing LLaMA training dataset of over 1.2 trillion tokens”. It’s a collaboration between Together, Ontocord.ai, ETH DS3Lab, Stanford CRFM, …

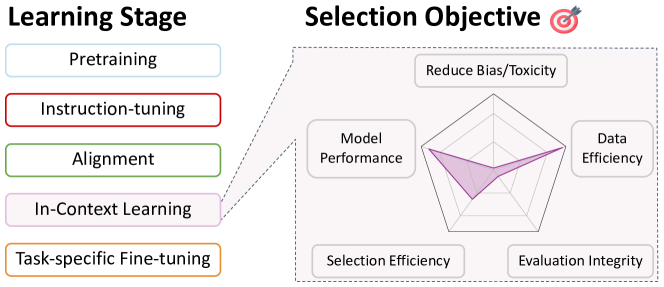

A Survey on Data Selection for Language Models

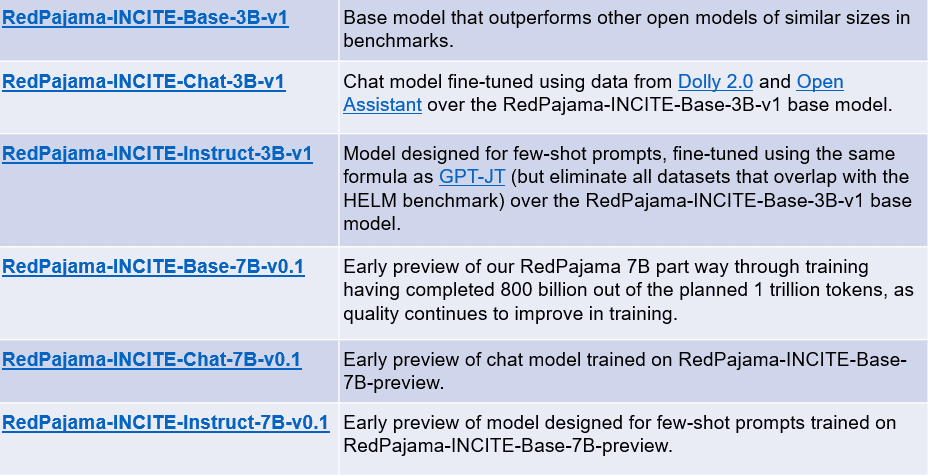

RedPajama Project: An Open-Source Initiative to Democratizing LLMs

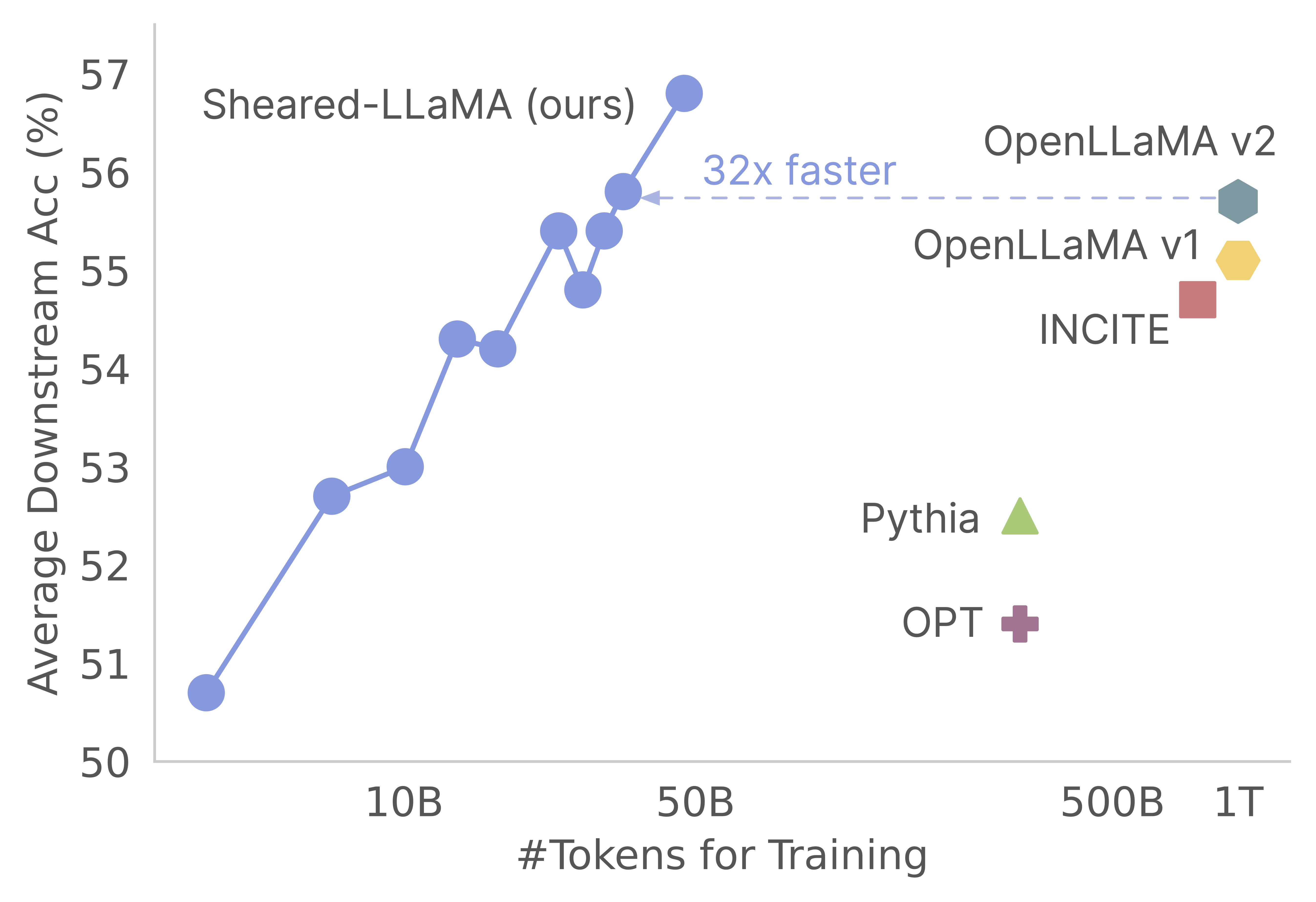

Sheared LLaMA: Accelerating Language Model Pre-training via

Open-Sourced Training Datasets for Large Language Models (LLMs)

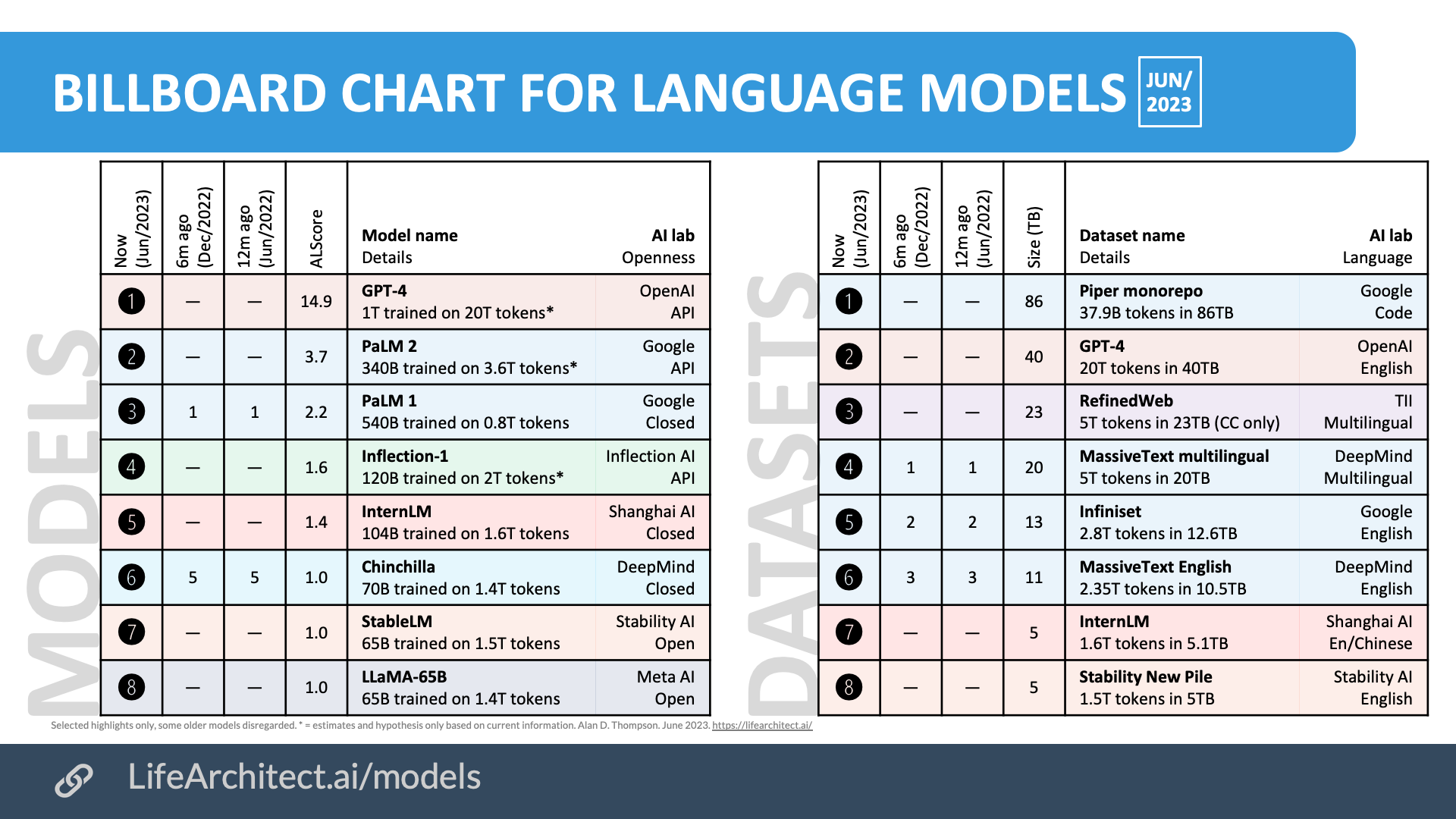

Inside language models (from GPT to Olympus) – Dr Alan D. Thompson

What's in the RedPajama-Data-1T LLM training set

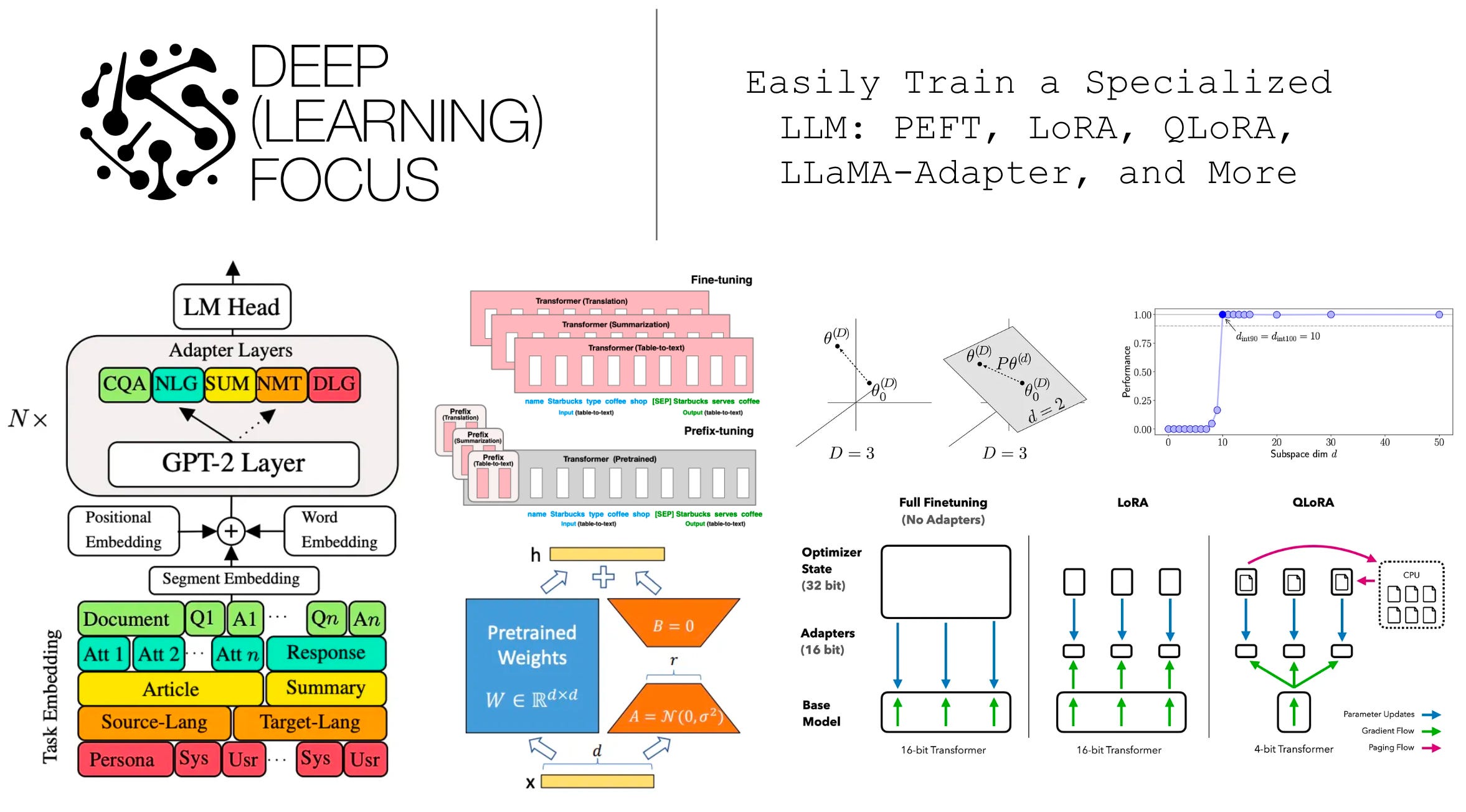

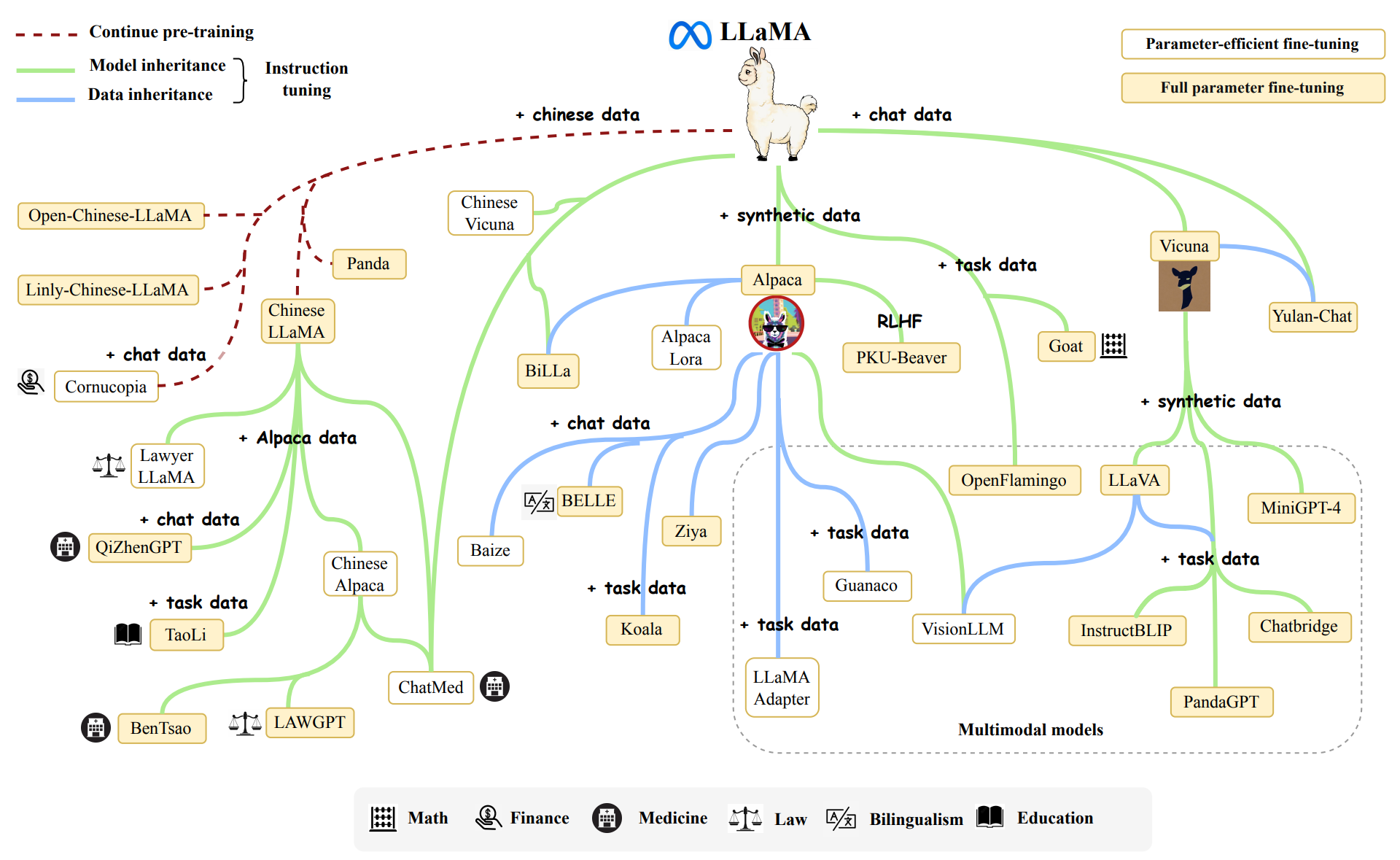

Easily Train a Specialized LLM: PEFT, LoRA, QLoRA, LLaMA-Adapter

What is RedPajama? - by Michael Spencer