Introducing MPT-30B, a new, more powerful member of our Foundation Series of open-source models, trained with an 8k context length on NVIDIA H100 Tensor Core GPUs.

maddes8cht/mosaicml-mpt-30b-chat-gguf · Hugging Face

The History of Open-Source LLMs: Better Base Models (Part Two), by Cameron R. Wolfe, Ph.D.

Train Faster & Cheaper on AWS with MosaicML Composer

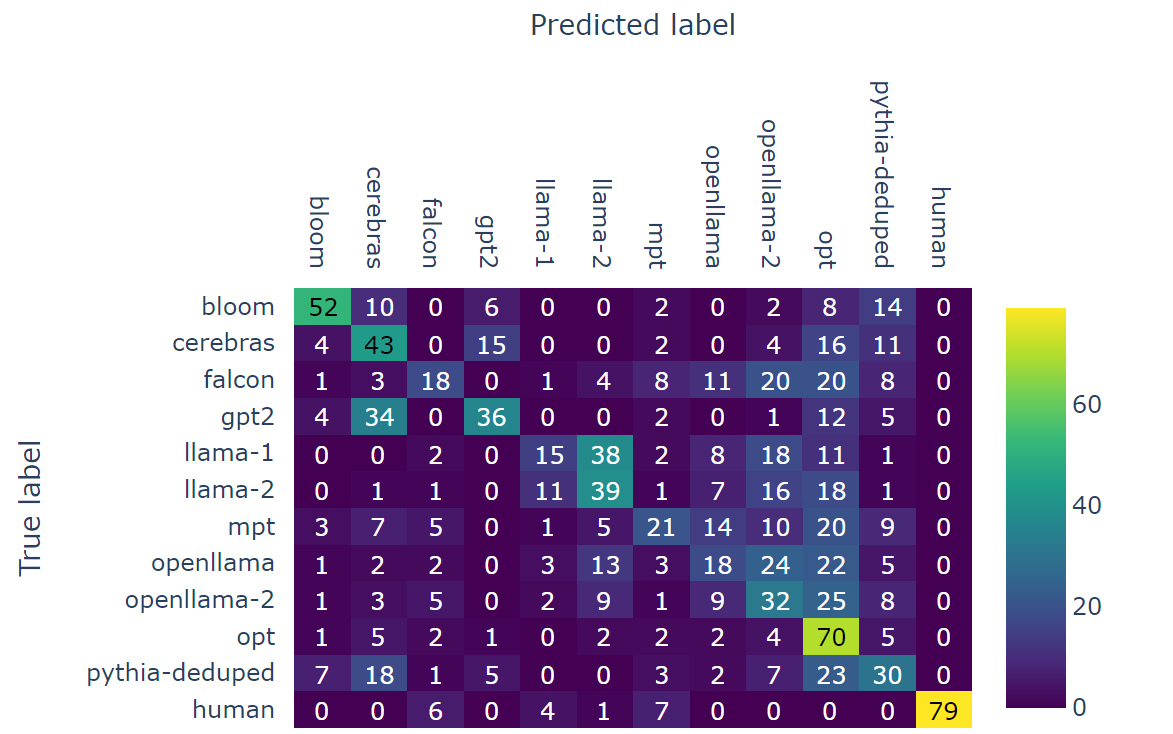

[2309.13322] From Text to Source: Results in Detecting Large Language Model-Generated Content

MosaicML Just Released Their MPT-30B Under Apache 2.0. - MarkTechPost

Guide Of All Open Sourced Large Language Models (LLMs), by Luv Bansal

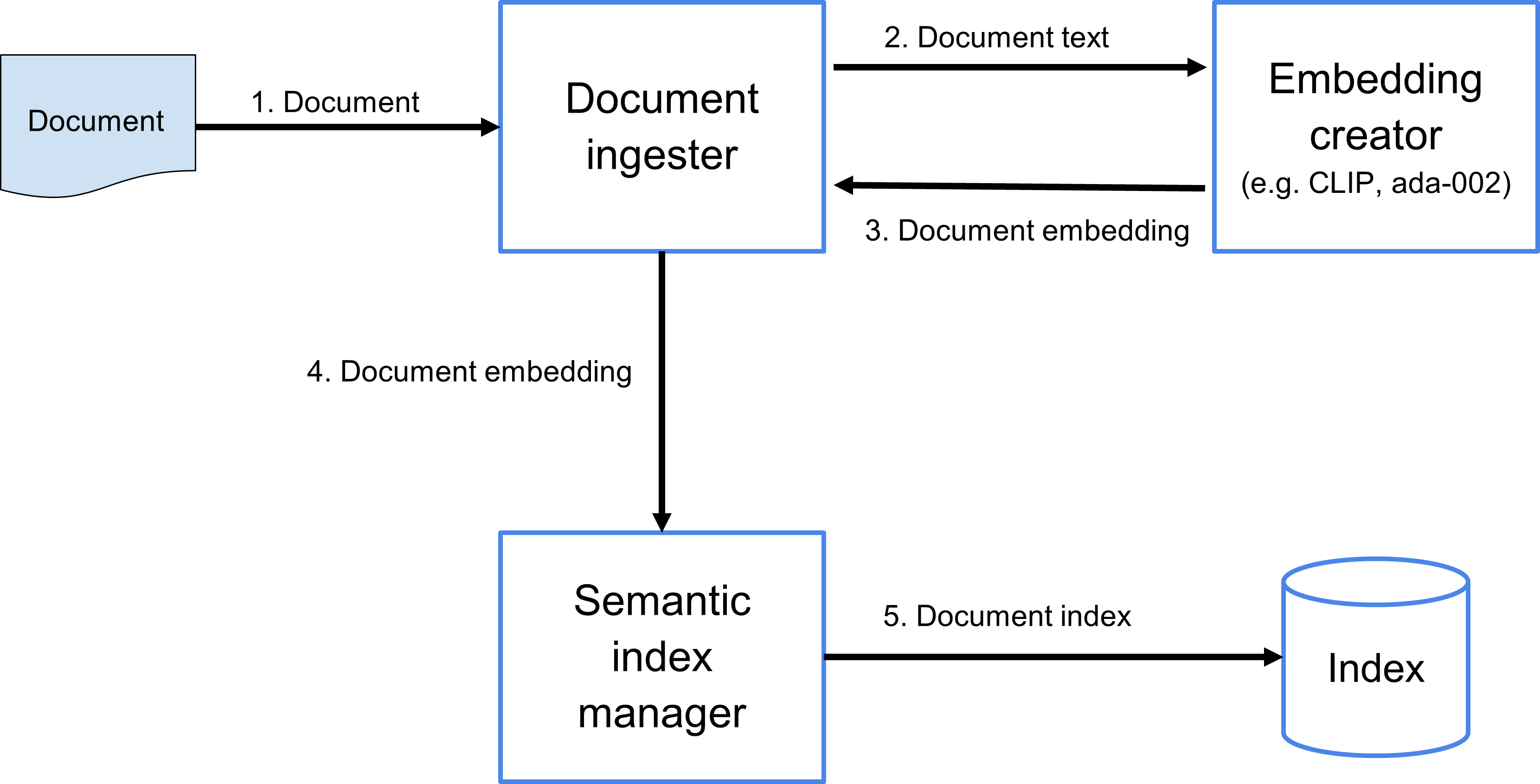

The Code4Lib Journal – Searching for Meaning Rather Than Keywords and Returning Answers Rather Than Links

MPT-30B: Raising the bar for open-source foundation models
