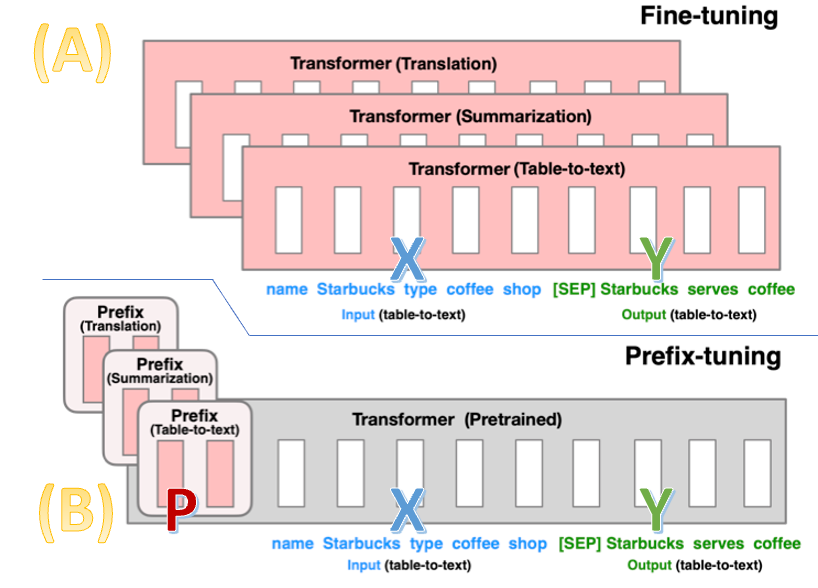

In this post and the ones that follow, I will walk you through the fine-tuning process for a Large Language Model (LLM) or a Generative Pre-trained Transformer (GPT). There are two prominent fine-tuning…
